I received my PhD in Applied and Computational Math from the University of South Carolina, where my advisors were Prof. Lili Ju and Prof. Zhu Wang. Before that, I did my undergraduate studies at Wuhan University, where I worked with Prof. Xiaoping Zhang.

My PhD research focused on deep-learning-based methods for scientific computing. I am now a Quantitative Analytics Specialist at Wells Fargo.

No difference, I care or not.

Link to [Google Scholar]

A Deep Learning Method for the Dynamics of Classic and Conservative Allen-Cahn Equations Based on Fully-Discrete Operators

Yuwei Geng, Yuankai Teng, Zhu Wang, Lili Ju

Journal of Computational Physics (JCP) [JCP] [code]

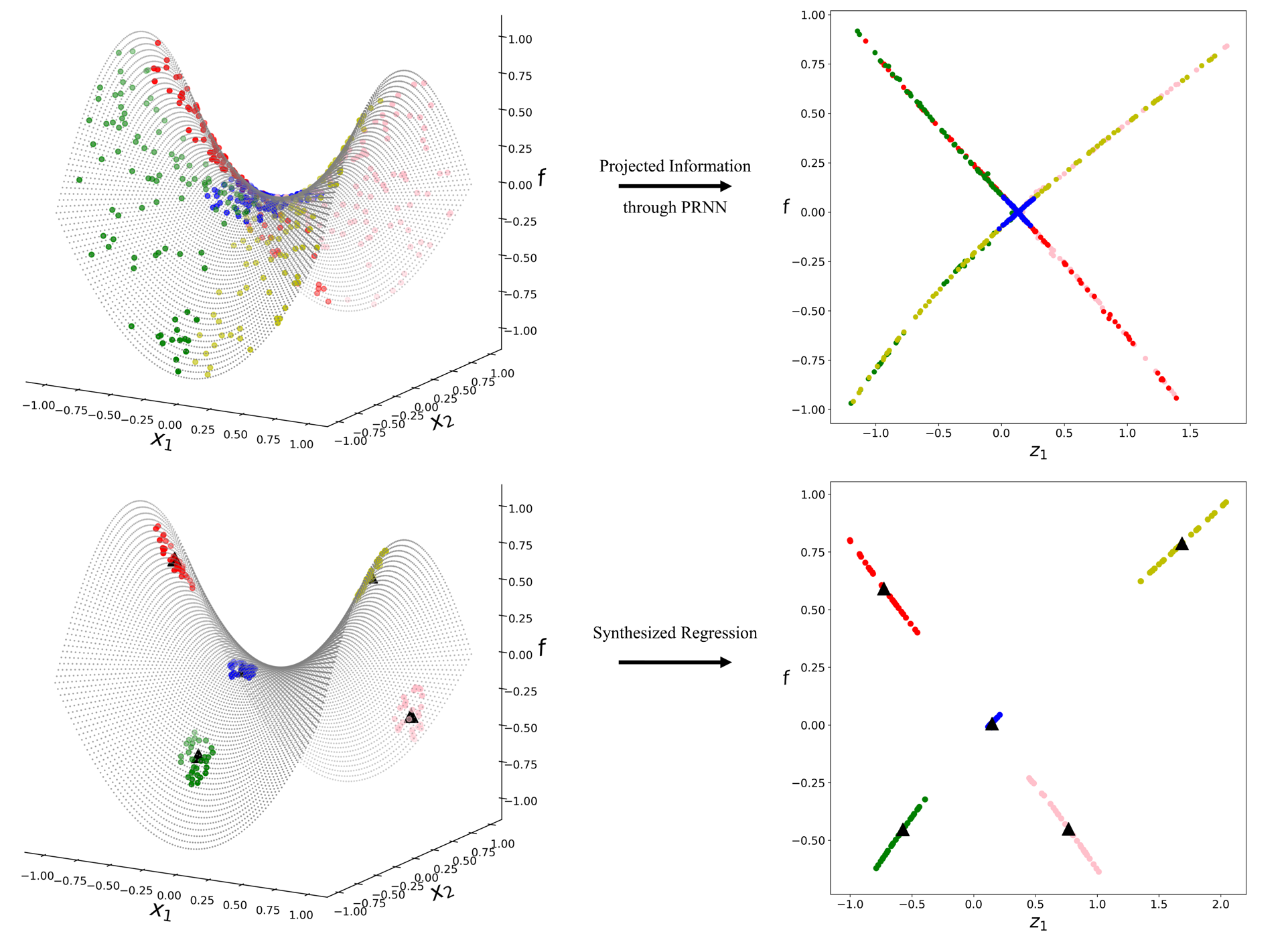

Level Set Learning with Pseudo-Reversible Neural Networks for Nonlinear Dimension Reduction in Function Approximation

Yuankai Teng, Zhu Wang, Lili Ju, Anthony Gruber, Guannan Zhang

SIAM Journal on Scientific Computing (SISC) [SIAM] [code]

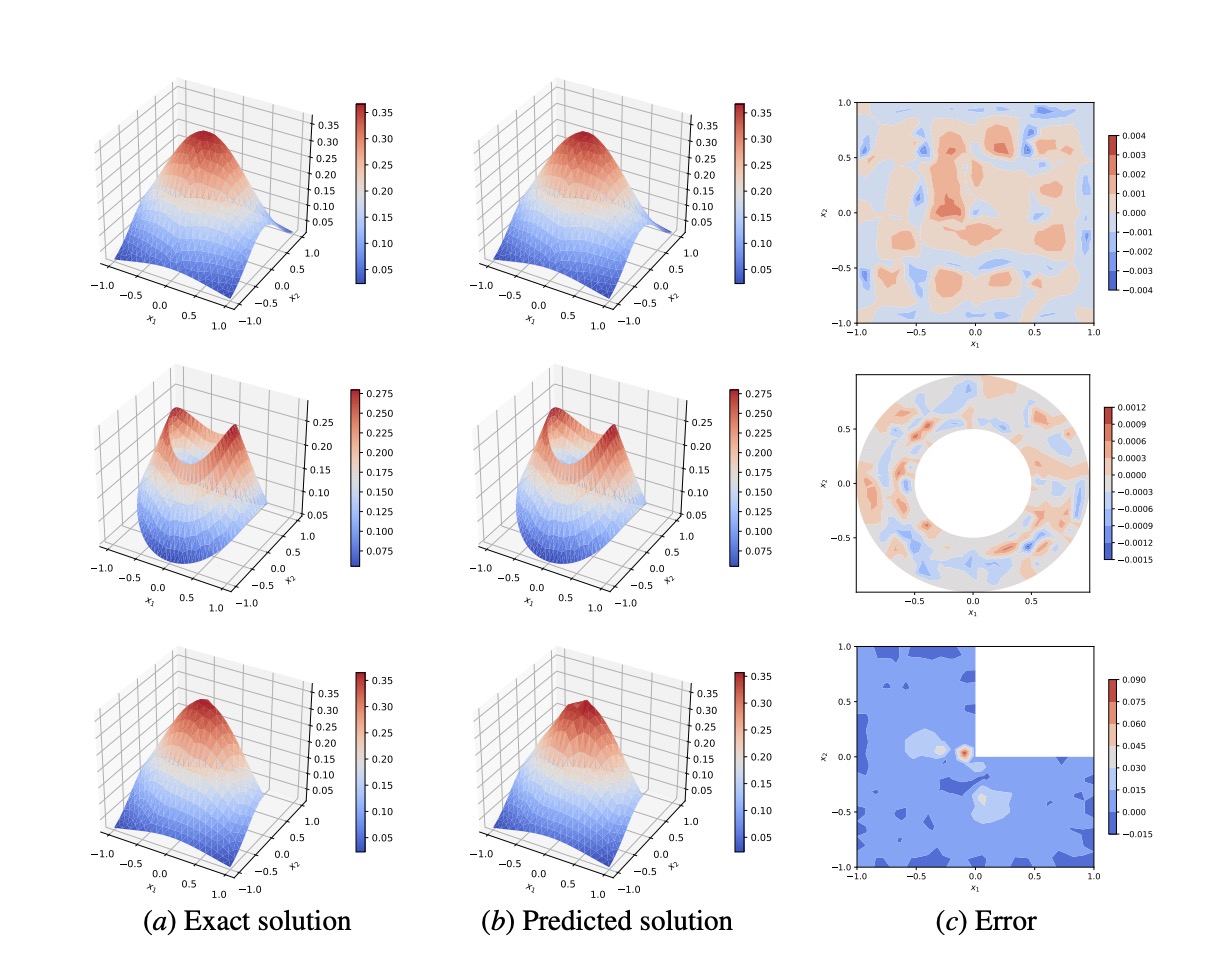

Learning Green's Functions of Linear Reaction-Diffusion Equations with Application to Fast Numerical Solver

Yuankai Teng, Xiaoping Zhang, Zhu Wang, Lili Ju

Proceedings of the Third Mathematical and Scientific Machine Learning Conference (MSML'2022). [MSML22] [code]

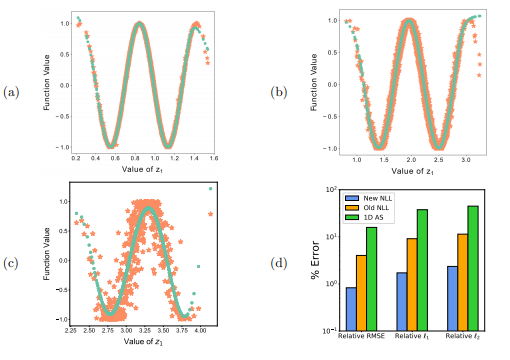

Nonlinear Level Set Learning for Function Approximation on Sparse Data with Applications to Parametric Differential Equations

Anthony Gruber, Max Gunzburger, Lili Ju, Yuankai Teng, Zhu Wang

Numer. Math. Theor. Meth. Appl. (2021). [DOI]

Interactive Binary Image Segmentation with Edge Preservation

Jianfeng Zhang, Liezhuo Zhang, Yuankai Teng, Xiaoping Zhang, Song Wang, Lili Ju

[arXiv]

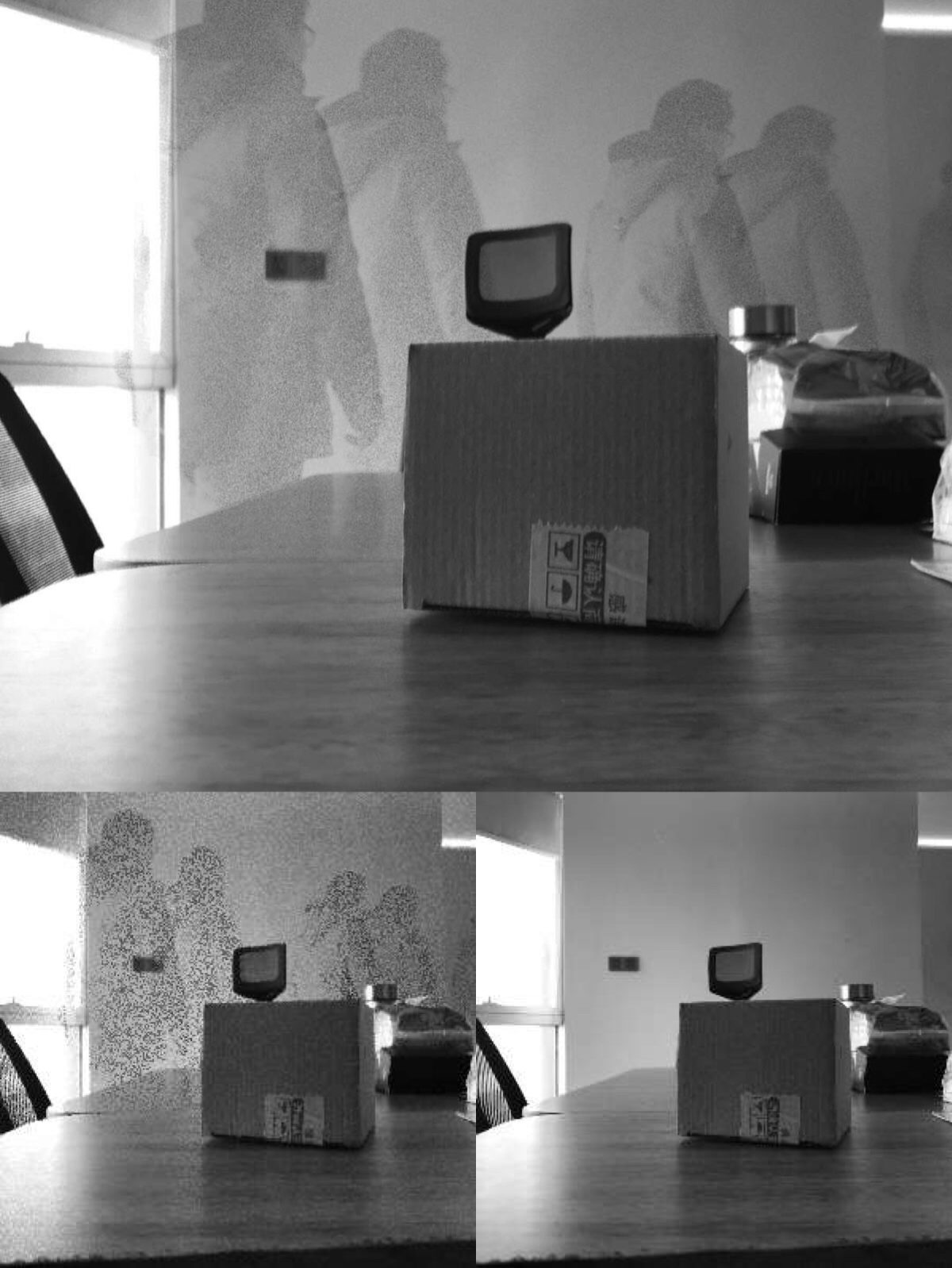

Low Frequency Objects In Unchanged Background Elimination Algorithm [Code]

I use the RANSAC (Random Sample Consensus) method to remove unwanted objects that appear at random in a time-continuous series of images of an unchanging background. The project takes the noisy images as input and outputs cleaned background images. The cleaned images reduce the noise that arises in the 3D reconstruction of objects by AI3D. The code is available on my GitHub.

This work was done during my internship at Farsee2 Technology.
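
The background-cleaning idea can be illustrated with a minimal sketch. It assumes grayscale frames stacked in a NumPy array and a constant-intensity background model per pixel; the function name `ransac_background` and the parameters `n_trials` and `tol` are illustrative choices, not taken from the repository code, which may work quite differently.

```python
import numpy as np


def ransac_background(frames, n_trials=20, tol=10.0, seed=0):
    """Estimate a static background from a stack of grayscale frames.

    frames: array of shape (T, H, W).
    For every pixel, a constant-intensity model is fitted RANSAC-style:
    one frame is drawn at random as the hypothesis, the frames whose value
    lies within `tol` of it form the consensus set, and the hypothesis with
    the largest consensus set wins. The background value is the mean of
    that set. (Illustrative sketch; names and defaults are assumptions.)
    """
    frames = np.asarray(frames, dtype=np.float64)
    T, H, W = frames.shape
    rng = np.random.default_rng(seed)

    best_count = np.zeros((H, W), dtype=np.int64)
    background = frames[0].copy()

    for _ in range(n_trials):
        # Draw one random frame index per pixel and read its value.
        idx = rng.integers(0, T, size=(H, W))
        hypothesis = np.take_along_axis(frames, idx[None, :, :], axis=0)[0]

        # Consensus set: frames that agree with the hypothesis at this pixel.
        inliers = np.abs(frames - hypothesis[None, :, :]) <= tol
        count = inliers.sum(axis=0)  # always >= 1: the sampled frame agrees with itself

        # Refine the estimate as the mean over the consensus set.
        refined = (frames * inliers).sum(axis=0) / count

        # Keep the refined value wherever this trial found a larger consensus.
        better = count > best_count
        background[better] = refined[better]
        best_count[better] = count[better]

    return background
```

Because a transient object covers a given pixel in only a few frames, the background intensity usually wins the per-pixel vote, so the object is dropped from the output. In this sketch `tol` should sit above the sensor noise level and below the contrast between object and background.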